Effectiveness of AI Tools in Software Development

By Aryan Tamrakar, Siham Boumalak, Chudah Yakung

December 17, 2023

Effectiveness of AI Tools in Software Development: A Case Study Using Ruby on Rails

Vivi Dynamics AMRE Team

Aryan Tamrakar, Siham Boumalak, Chudah Yakung

Applied Methods and Research Experience, The College of Wooster

July 7th, 2023

Table of Contents

1 Introduction

1.1 Information about Vivi Dynamics

1.2 Types of AI Tools in Software Development

1.2.1 ChatGPT

1.2.2 Google Bard

1.2.3 GitHub Copilot

1.3 Roles of AI Tools in Software Development

2 Methodology

2.1 The Various Stages of the Development Process

2.1.1 Setup

2.1.2 Git/GitHub

2.1.3 Learning Ruby on Rails

2.1.4 Coding Tasks (Backend and Frontend)

2.1.5 Tests

2.1.6 Code Review

2.2 Ensuring the Quality of Work

2.3 Recording the Interactions with AI

3 Results and Analysis

3.1 Quantitative Analysis

3.1.1 AI Interactions Among All Participants

3.1.2 AI Interactions for Individual Participants

3.1.3 Re-prompting During AI Interactions

3.2 Qualitative Analysis

3.3 Best Practices

4 Threats to Validity

5 Conclusion

6 Appendix

Abstract

This research paper investigates the effectiveness of artificial intelligence (AI) tools, specifically ChatGPT and Bard, in enhancing the productivity of inexperienced software developers. As artificial intelligence continues to advance, the integration of AI tools into software development processes is becoming increasingly prevalent. This study aims to comprehensively evaluate the usefulness of ChatGPT and Bard for key aspects of software development, including code generation, code review, debugging, and testing.

The research uses a mixed-methods approach that combines quantitative analysis with qualitative feedback from the team's experience as software developers. The quantitative component involves recording daily interactions with ChatGPT and Bard and assessing their effectiveness across development tasks such as code generation, testing, code review, and debugging. These assessments are based on the helpfulness rating of each response, the frequency of inquiries made to the AI tools, and the need for repeated or clarified questions. The collected data is analyzed to draw conclusions about whether these AI tools could replace senior developers who mentor and assist junior developers, and whether AI tools might eventually replace certain developer roles.

The findings of this research will help software development teams, project managers, and researchers in making informed decisions about the integration of AI tools into their workflows.

Keywords: AI tools, ChatGPT, Bard, software development, productivity, code generation, problem-solving, code quality, qualitative analysis, quantitative analysis.

1 Introduction

With the rapid evolution of software development, developers are constantly seeking ways to improve productivity, efficiency, and innovation. One promising avenue that has gained considerable attention is the integration of artificial intelligence (AI) tools into the software development process. In this report, we evaluate the effectiveness of two AI tools, ChatGPT and Google Bard, by using them to integrate new functionality into a Rails-based web application. In this case study, we aim to assess the impact of these AI tools on productivity and code quality. Additionally, we explore how AI tools affect developers' understanding of complex code patterns and programming concepts.

Our research, conducted at the College of Wooster by a team of three student researchers for the Vivi Dynamics company through the Applied Methods and Research Experience (AMRE) program, seeks to provide an empirical perspective on the role of AI tools in software development. We carried out this case study by using AI tools to develop new features for the Vivi Dynamics website. We recorded each interaction with the AI tools and rated the helpfulness of each interaction.

1.1 Information about Vivi Dynamics

Vivi Dynamics is a startup company founded by Jason Berry that provides technological and innovative services, such as software development, to its clients. The company's website is a Rails application with a front end, a back end, and an admin panel.

1.2 Types of AI Tools in Software Development

Several AI tools are commonly used in software development, such as Codota, DeepCode AI, ChatGPT, Bard, and GitHub Copilot. Of these, ChatGPT, Bard, and GitHub Copilot were the tools used in this research.

1.2.1 ChatGPT

ChatGPT is an AI chatbot developed by OpenAI and launched on November 30, 2022. At the time of this study, its training data extended only to September 2021, so it had no knowledge of later events or releases. ChatGPT understands and generates natural language; it offers contextual suggestions, answers queries, and assists with code generation and documentation.

1.2.2 Google Bard

Google Bard is an experimental AI chatbot developed by Google. At the time of this study, it was based on the Pathways Language Model 2 (PaLM 2), a large language model (LLM) announced in May 2023. PaLM 2 was reportedly trained on a dataset of 3.6 trillion tokens (a token is a basic unit of text, such as a word, part of a word, or a punctuation mark), nearly five times as many as were used to train the original PaLM. Like ChatGPT, Bard can generate text and code and answer questions in an informative way.

1.2.3 GitHub Copilot

GitHub Copilot is an AI-powered tool that assists developers by suggesting code. As an AI pair programmer, it uses context from the current file and related files, as well as natural-language comments, to provide code completions. Powered by OpenAI Codex and trained on a vast corpus of public code repositories, GitHub Copilot performs best in well-represented languages such as JavaScript, with more variable results in languages that are less represented.

1.3 Roles of AI Tools in Software Development

AI tools can enhance productivity and efficiency in software development by analyzing code to identify common coding patterns, point out potential bugs, and suggest performance optimizations. As a result, developers can reduce the time and effort spent on manual code review and optimization, which can improve code quality and shorten development cycles.

AI tools like ChatGPT and Bard offer various functionalities that can benefit software development. They can assist with debugging by identifying errors and suggesting fixes from natural-language descriptions or stack traces. They can also generate test cases and test data from natural-language descriptions of the desired scenarios, making software testing more efficient. Moreover, they can help analyze user requirements, generate user-interface code, and power chatbots that interact with users. Lastly, they can generate software documentation from natural-language descriptions, saving developers time and improving the quality of the documentation.

2 Methodology

The Vivi Dynamics AMRE team consisted of three student developers and two professors from the College of Wooster who served as supervisors. Together, they explored the integration of ChatGPT and Bard into the software development process for Vivi Dynamics over a seven-week study. The AI tools were used as real-time coding assistants within an exploratory, iterative development process. Tasks were assigned as Trello cards by the client, who is also a seasoned software engineer and who prioritized the cards.

To gauge the effectiveness of the AI tools, the students followed a systematic data collection process. The team documented each interaction with the AI during the website's development, including both the queries made to the AI and the responses received. The usefulness of each AI response was rated by the participant who posed the query. Each interaction was classified into one of six purpose categories: code generation, debugging assistance, code review, generation of feature tests, environment setup, and version control and collaboration through GitHub.

One interaction is defined as a series of prompts beginning with a single query, followed by any re-prompts, i.e., follow-up questions or modifications to the initial question; in this way, an interaction is analogous to a thread or conversation. Some follow-up questions asked about the purpose of specific functions and their potential impact on the logic of other parts of the codebase. For each interaction, the team recorded the Trello card (task) being worked on, the participant, the AI tool (ChatGPT or Bard), a brief description of the interaction, the task category (setup, code generation, debugging, tests, code review, or GitHub), the number of re-prompts within the interaction, the week (1-7) in which it took place, and a helpfulness rating of the AI tool's response on a 4-point Likert scale:

- 1 (very unhelpful): a sizable portion of the output has incorrect logic or incorrect syntax, which leads the developers into extensive debugging.
- 2 (somewhat unhelpful): the response provides partial assistance but includes incorrect suggestions that cause bugs; while some parts of the output may have correct logic, a substantial portion of the code is inaccurate or misleading.
- 3 (somewhat helpful): more than half of the output is correct; although it requires significant modification to integrate into the codebase, it gives developers valuable hints and a sense of direction.
- 4 (extremely helpful): the response follows the right logic and needs no significant modifications for accuracy or functionality, so the code can be integrated into the final codebase.
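To make the logging scheme concrete, the following is a minimal plain-Ruby sketch of one record in the interaction log and of the checks implied by the definitions above. The team recorded interactions in a shared document rather than in code, so the `Interaction` struct, its field names, and the category symbols are hypothetical.

```ruby
# Hypothetical sketch of one row in the interaction log; field and
# category names are illustrative, not the team's actual column names.
TOOLS = %w[ChatGPT Bard].freeze
CATEGORIES = %i[setup code_generation debugging tests code_review github].freeze
RATING_LABELS = {
  1 => "very unhelpful",
  2 => "somewhat unhelpful",
  3 => "somewhat helpful",
  4 => "extremely helpful"
}.freeze

Interaction = Struct.new(
  :card, :participant, :tool, :description,
  :category, :reprompts, :week, :rating,
  keyword_init: true
) do
  # Returns a list of problems; an empty list means the record is usable.
  def errors
    problems = []
    problems << "unknown tool" unless TOOLS.include?(tool)
    problems << "unknown category" unless CATEGORIES.include?(category)
    problems << "re-prompts must be a non-negative integer" unless reprompts.is_a?(Integer) && reprompts >= 0
    problems << "week must be between 1 and 7" unless (1..7).cover?(week)
    problems << "rating must be on the 1-4 scale" unless RATING_LABELS.key?(rating)
    problems
  end

  def valid?
    errors.empty?
  end

  # Human-readable label for the Likert rating.
  def rating_label
    RATING_LABELS.fetch(rating)
  end
end

# Placeholder values for illustration only, not a record from the study.
example = Interaction.new(
  card: "Example Trello card", participant: 1, tool: "ChatGPT",
  description: "Asked how to add a status column", category: :code_generation,
  reprompts: 2, week: 3, rating: 3
)
puts example.valid?       # prints true
puts example.rating_label # prints somewhat helpful
```

Keeping the allowed values in frozen constants means the same definitions can back both validation and reporting.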

2.1 The Various Stages of the Development Process

The development process for the Vivi Dynamics website is divided into six distinct stages. Each stage contributes a unique element to the final product, supporting its overall functionality, stability, and quality. The process draws on the expertise of the team members, supported by AI tools. The stages are as follows: setup, Git/GitHub, learning Ruby on Rails, coding tasks, tests, and code review.

2.1.1 Setup

To develop the Vivi Dynamics website with AI tools, participants began by setting up an environment to run the website locally. They obtained the code from the project's GitHub repository and ran it on their own machines. Each team member then prompted ChatGPT and Bard with specific questions and concerns, asking for step-by-step instructions on setting up the Rails environment on their systems.

2.1.2 Git/GitHub

For software developers working in a team, using a version control system such as Git, together with a hosting service such as GitHub, is a crucial part of the development process. The participants, who were the software developers on the project, used Git and GitHub for version control, collaboration, and code review. This required running various Git commands so that the code each participant wrote was kept safe and usable by all the participants.

2.1.3 Learning Ruby on Rails

Before this project, none of the three team members had any experience with Ruby on Rails. The team therefore used the AI tools to learn the Ruby language and the Ruby on Rails framework. Each participant asked questions ranging from the basics of Ruby syntax and Rails conventions to the Rails MVC (model-view-controller) architecture and database management.

2.1.4 Coding Tasks (Backend and Frontend)

Once the participants had familiarized themselves with the concepts of Ruby on Rails, the team began developing additional features for the website as directed by the CEO and founder of Vivi Dynamics, Jason Berry. Trello, a project management tool, was used to track and manage these tasks. The Trello cards served as an explicit visual representation of the project's progress throughout the seven-week development phase. Over this period, 14 Trello cards were completed, as listed below:

- Ability to categorize a Contact as a LEAD.

- Ability to categorize a Contact as JUNK.

- Ability to filter JUNK Contacts from the main Contact list page.

- Ability to protect the website from spam Contacts.

- Ability to manually create a Prospective Client.

- Ability to see a list of Prospective Clients from the admin menu.

- Ability to convert a LEAD Contact into a Prospective Client.

- Ability to edit a Prospective Client to fix typos and other mistakes.

- Propose a way to handle Alumni and Contractors of Vivi Dynamics as User Types.

- Ability to promote a Prospective Client to become a Client.

- Ability to prevent submissions of the Contact form using the company’s email.

- Ability to create Client projects.

- Ability for Alumni to post a Blog Post.

- Ability to demote a Prospective Client.

Before being merged into the main codebase, the code for each of these features went through multiple rounds of testing, code review, and client approval.
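Several of these cards describe simple model behavior. As a rough, framework-free illustration (not the code that was merged into the Vivi Dynamics codebase), the sketch below models three of them: categorizing a Contact as LEAD or JUNK, hiding JUNK Contacts from the main list, and rejecting Contact-form submissions that use the company's email domain. The `Contact` class, its status names, and `COMPANY_DOMAIN` are assumptions. In the Rails application itself, this kind of behavior would more typically be expressed with an ActiveRecord `enum`, a `scope`, and a model validation.

```ruby
# Hypothetical placeholder for the company's email domain.
COMPANY_DOMAIN = "vividynamics.example"

class Contact
  STATUSES = %i[new lead junk].freeze

  attr_reader :name, :email, :status

  def initialize(name:, email:, status: :new)
    @name = name
    @email = email.to_s.strip.downcase
    self.status = status
  end

  # Only allow the statuses listed above.
  def status=(value)
    raise ArgumentError, "unknown status: #{value}" unless STATUSES.include?(value)

    @status = value
  end

  def mark_as_lead!
    self.status = :lead
  end

  def mark_as_junk!
    self.status = :junk
  end

  def junk?
    status == :junk
  end

  # Rejects malformed addresses and addresses on the company's own domain.
  def valid_submission?
    local, domain = email.split("@", 2)
    !local.to_s.empty? && !domain.to_s.empty? && domain != COMPANY_DOMAIN
  end

  # Contacts shown on the main list page: everything except JUNK.
  def self.main_list(contacts)
    contacts.reject(&:junk?)
  end
end

contacts = [
  Contact.new(name: "Ada", email: "ada@client.example"),
  Contact.new(name: "Bot", email: "bot@spam.example")
]
contacts[0].mark_as_lead!
contacts[1].mark_as_junk!
p Contact.main_list(contacts).map(&:name) # prints ["Ada"]
```

Routing every status change through `status=` keeps the set of allowed categories in one place, which is the same idea an `enum` provides in Rails.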

2.1.5 Tests

Before the project, none of the three team members had written tests for a software project. Fortunately, the project had a pre-existing test suite that served as a reference for new tests. Whenever a new feature was implemented, tests were added to verify that it worked correctly. Before pushing to the central repository, team members ensured that all existing and new tests passed locally. Additionally, continuous integration tests were run via GitHub hooks whenever commits were pushed.

2.1.6 Code Review

Code review is the final stage before a participant's code is deployed. It is a crucial part of the project because it gives team members a chance to critique each other's code. This collaborative scrutiny helps keep the code of high quality and comprehensible to newcomers. When one student team member completes a Trello card task, the other two student team members review the code.

2.2 Ensuring the Quality of Work

To ensure the quality of the work and the integrity of the data being collected, the team frequently met with the client and supervisors. The team met daily with the two supervisors and provided brief daily updates to the client via Slack. The entire team held weekly virtual meetings with the client, which consisted of a demo of all the features added in the past week, feedback from the client on progress, and direction on tasks for the coming week.

2.3 Recording the Interactions with AI

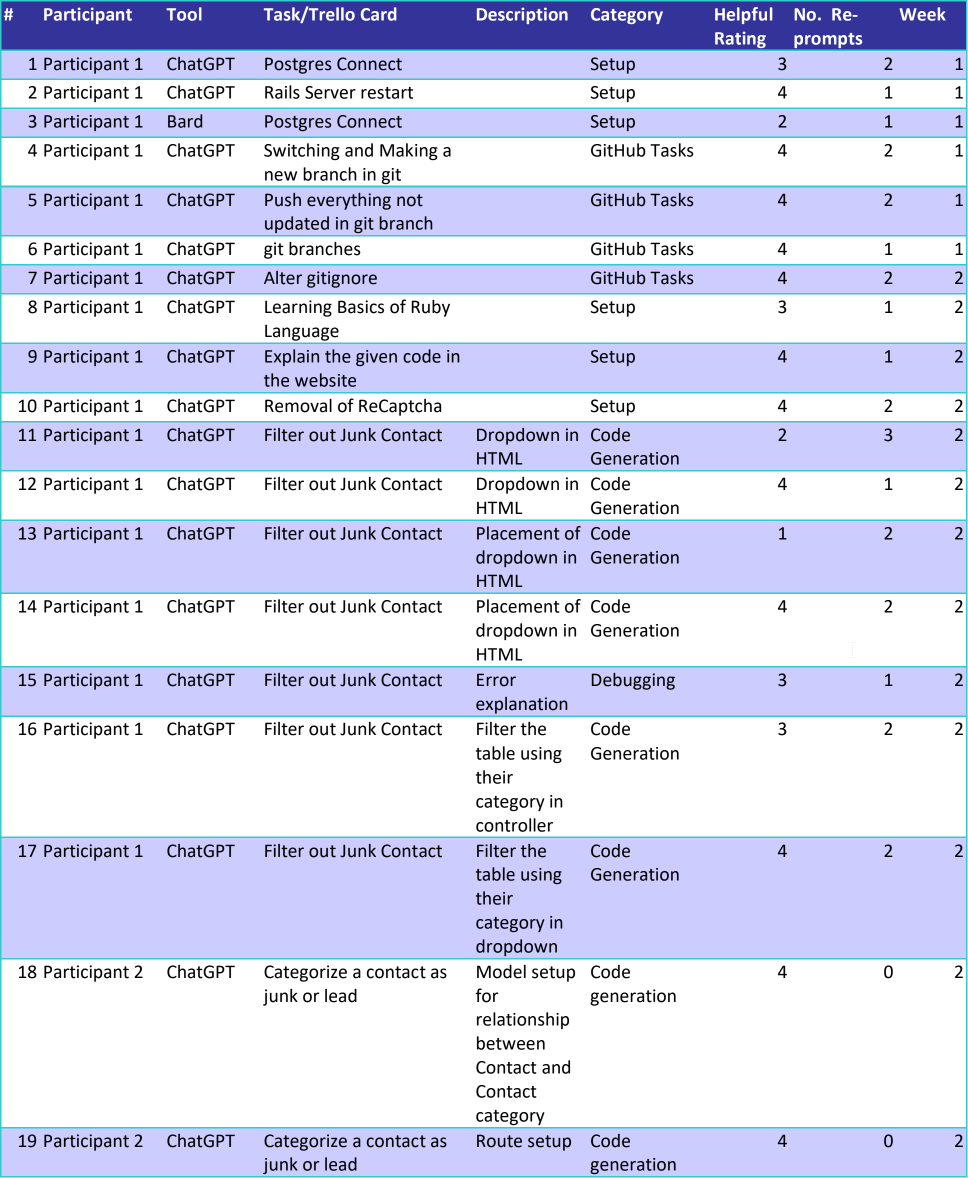

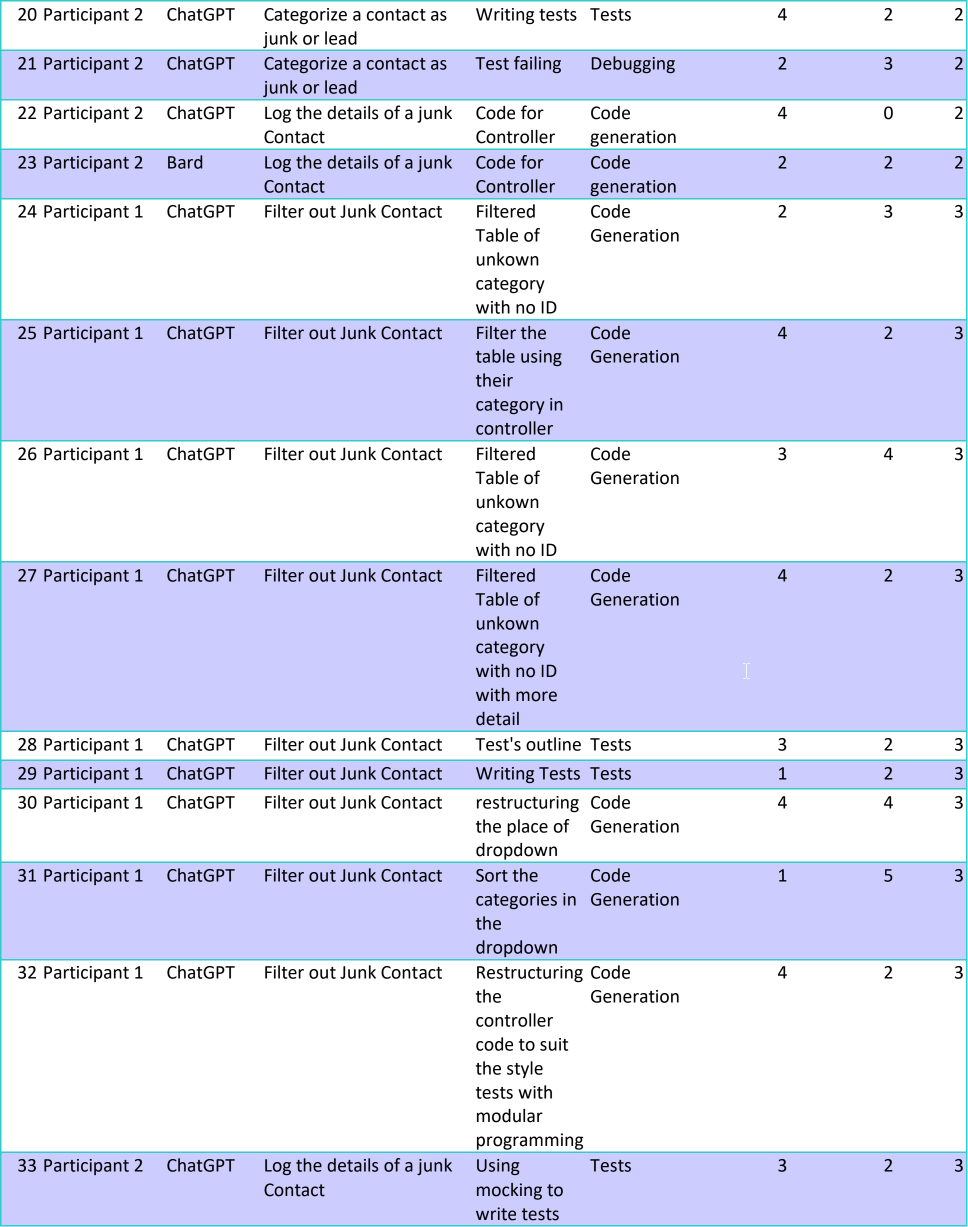

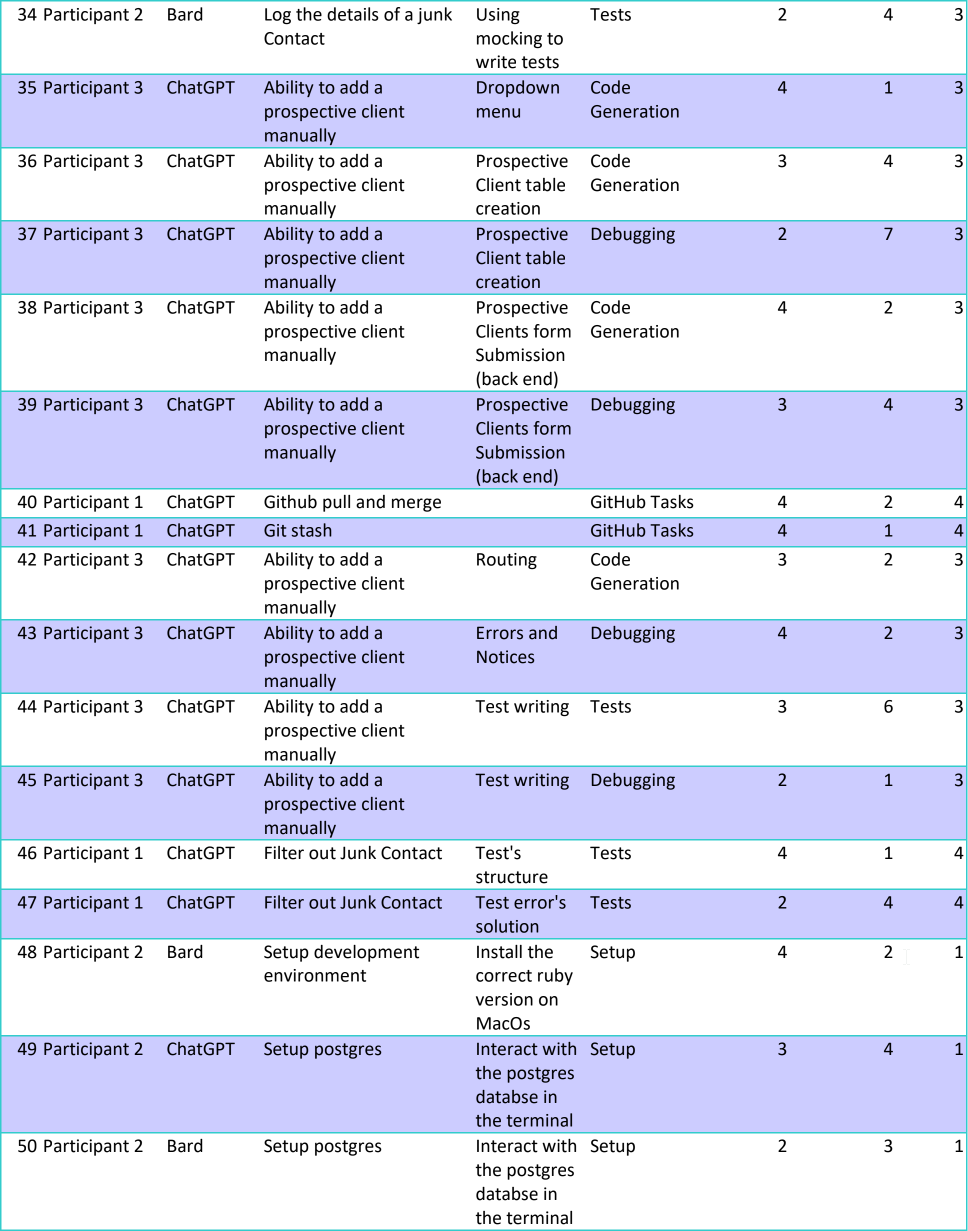

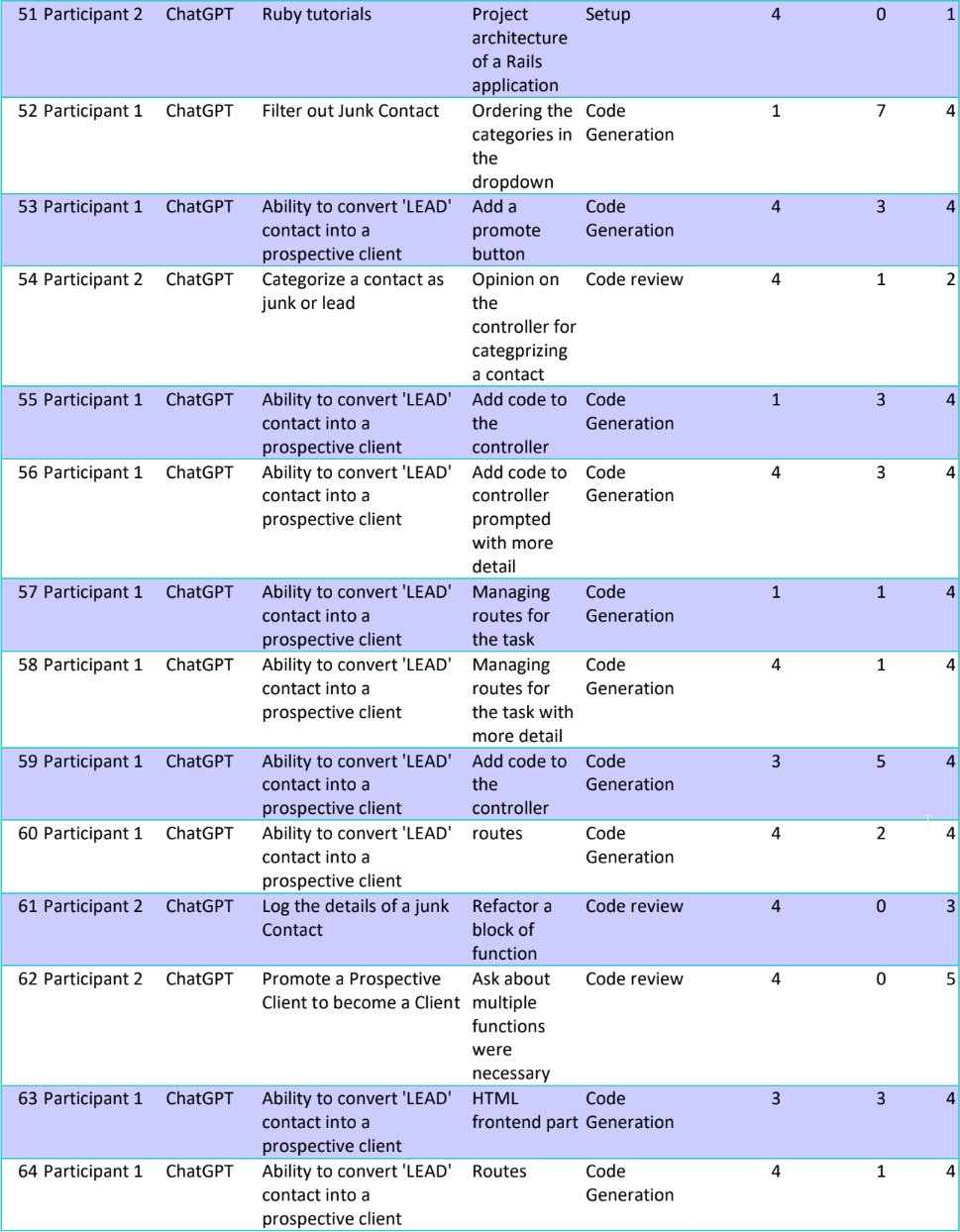

Each participant recorded every interaction with AI related to the feature development of the website in a shared document. All participants and supervisors had access to this shared file, so everyone could see each other's documentation. The documentation was later entered into a collective final database (see Appendix), which was used to calculate the number of questions asked, the number of interactions for each task, the helpfulness of the responses, and the number of times the AI was re-prompted with additional context.
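The calculations described above amount to simple group-and-aggregate operations over the logged interactions. The following minimal Ruby sketch shows one way to compute them, assuming each row carries the fields listed in the Methodology section; the `Row` struct, the method names, and the sample rows are hypothetical placeholders, not the study's data.

```ruby
# Hypothetical row layout; field names are illustrative.
Row = Struct.new(:participant, :tool, :category, :week, :reprompts, :rating)

# Number of interactions for each [tool, category] pair.
def interaction_counts(rows)
  rows.group_by { |r| [r.tool, r.category] }.transform_values(&:size)
end

# Mean helpfulness rating (1-4 Likert scale) for each [tool, category] pair.
def average_ratings(rows)
  rows.group_by { |r| [r.tool, r.category] }
      .transform_values { |group| (group.sum(&:rating).to_f / group.size).round(2) }
end

# Total re-prompts per category.
def reprompts_by_category(rows)
  rows.group_by(&:category).transform_values { |group| group.sum(&:reprompts) }
end

# Interactions per week, ordered by week number.
def interactions_per_week(rows)
  rows.group_by(&:week).transform_values(&:size).sort.to_h
end

# Placeholder rows for illustration only.
rows = [
  Row.new(1, "ChatGPT", :code_generation, 2, 1, 3),
  Row.new(2, "ChatGPT", :code_generation, 2, 0, 4),
  Row.new(3, "Bard", :setup, 1, 2, 2)
]
puts average_ratings(rows)[["ChatGPT", :code_generation]] # prints 3.5
```

Averaging Likert ratings treats the scale as interval data, which is a common simplification for summary charts.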

3 Results and Analysis

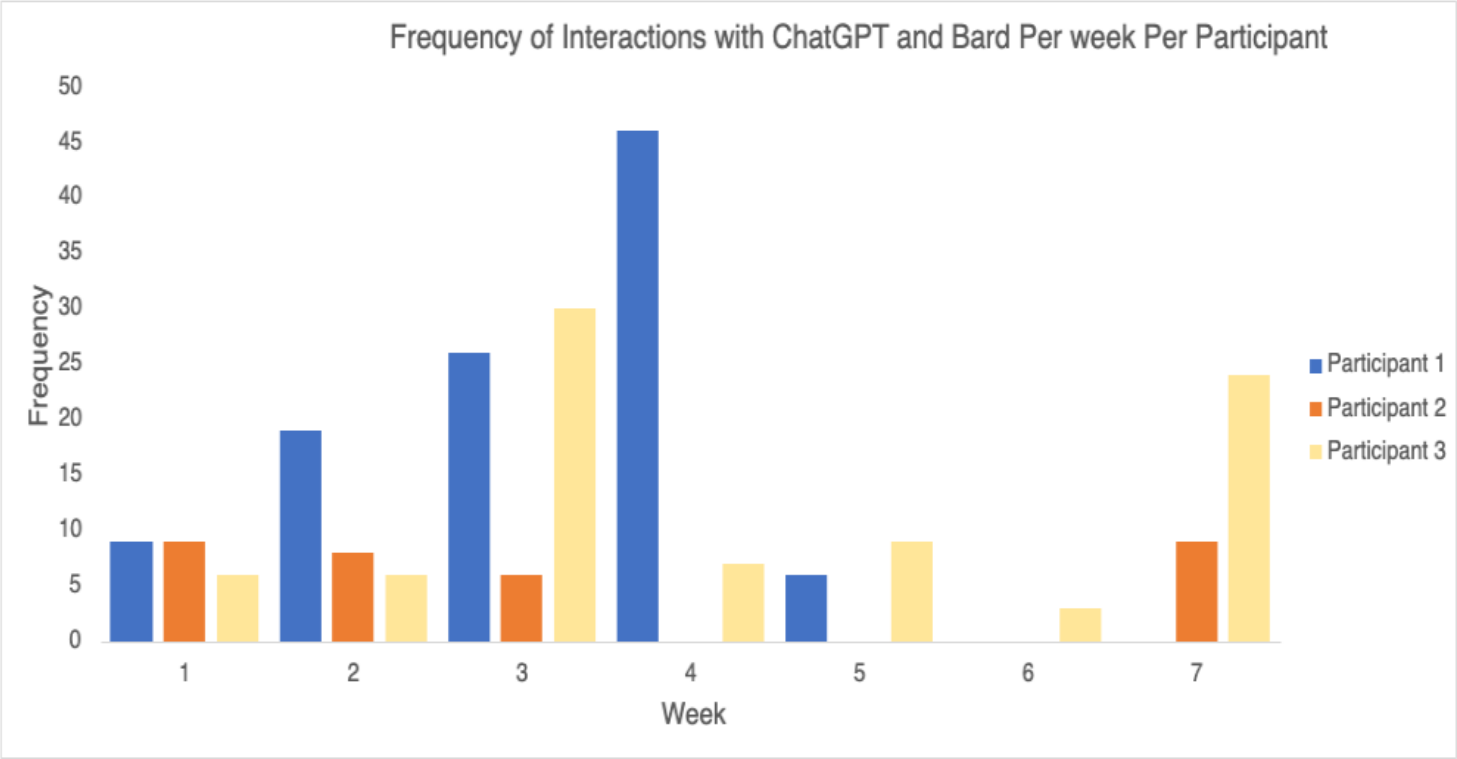

Throughout the study, 104 interactions between the participants and the AI tools were logged: 47 by Participant 1, 20 by Participant 2, and 37 by Participant 3. Our findings are presented in two parts: a quantitative analysis and a qualitative analysis.

3.1 Quantitative Analysis

We are primarily interested in how helpful AI tools are for software development.

3.1.1 AI Interactions Among All Participants

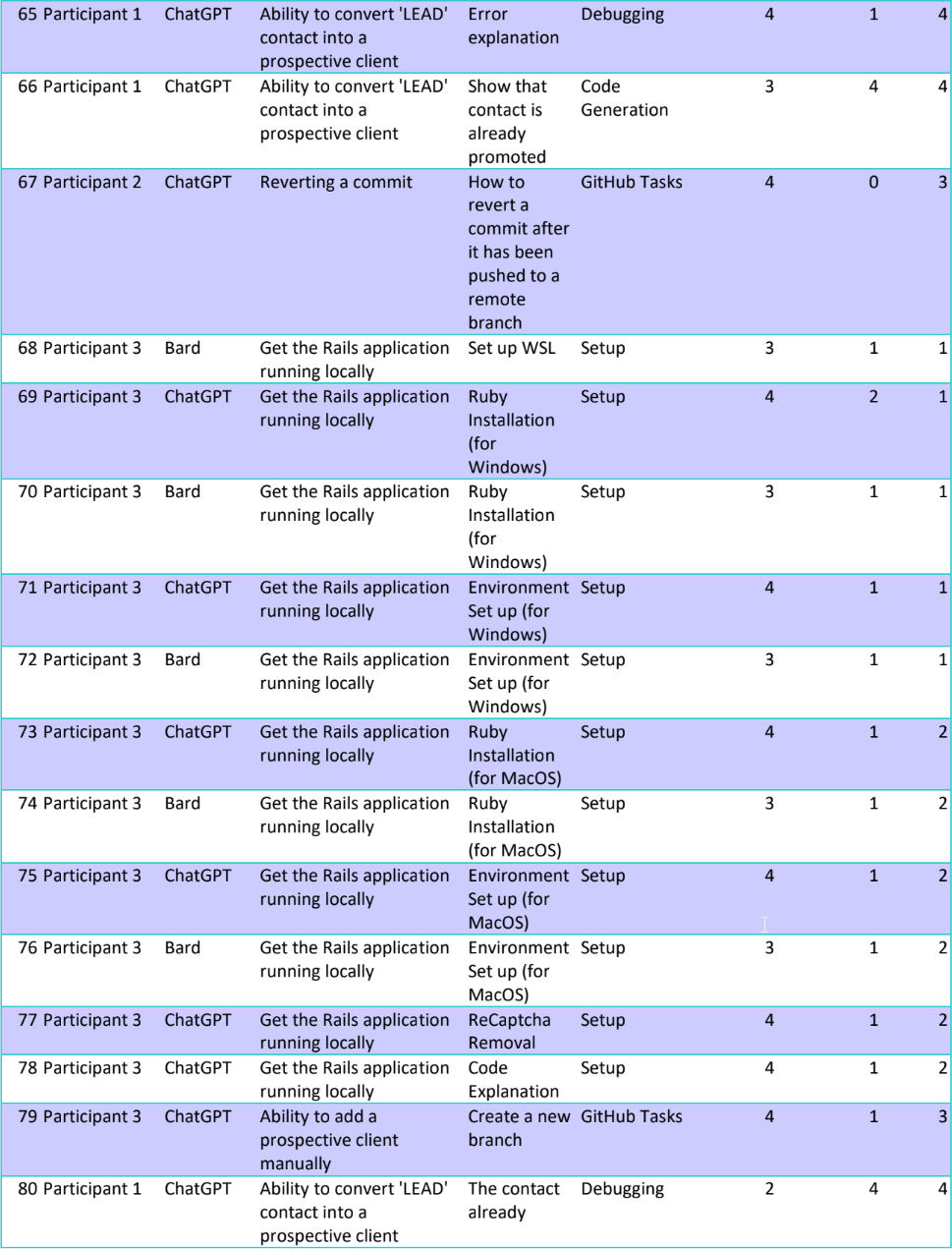

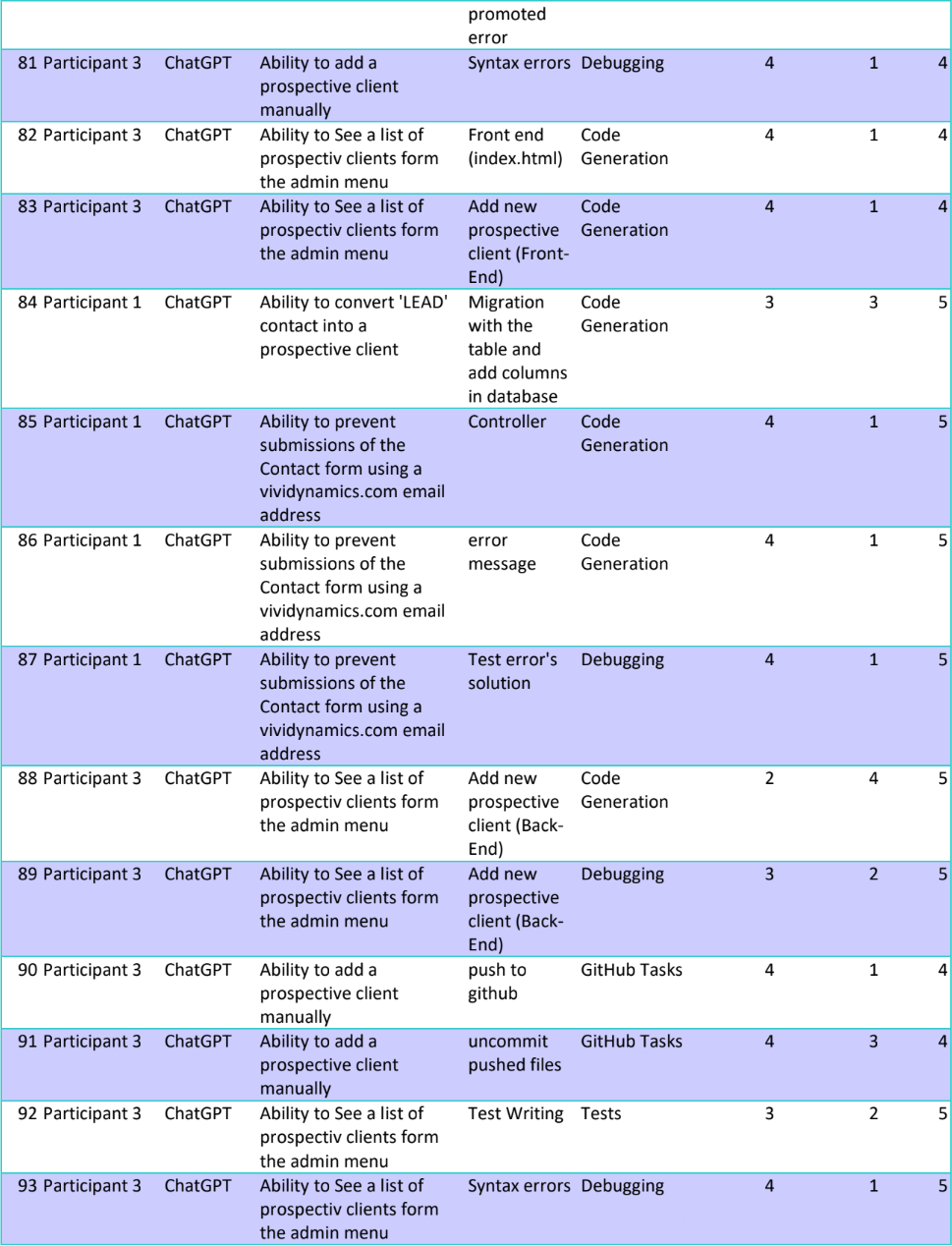

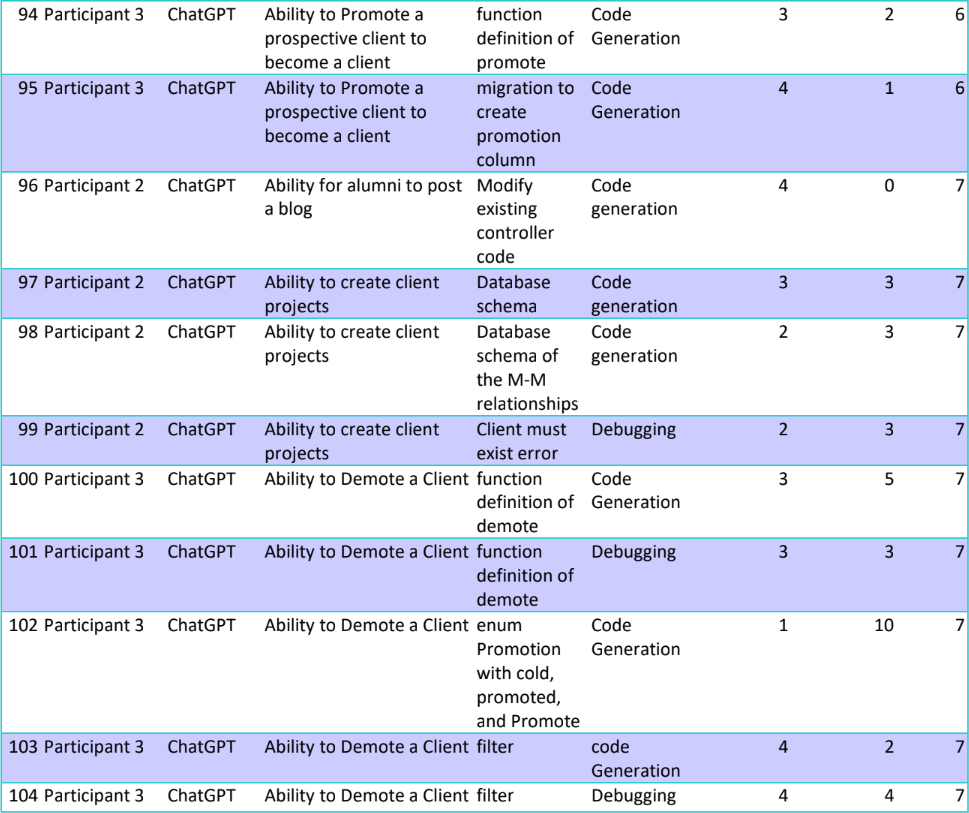

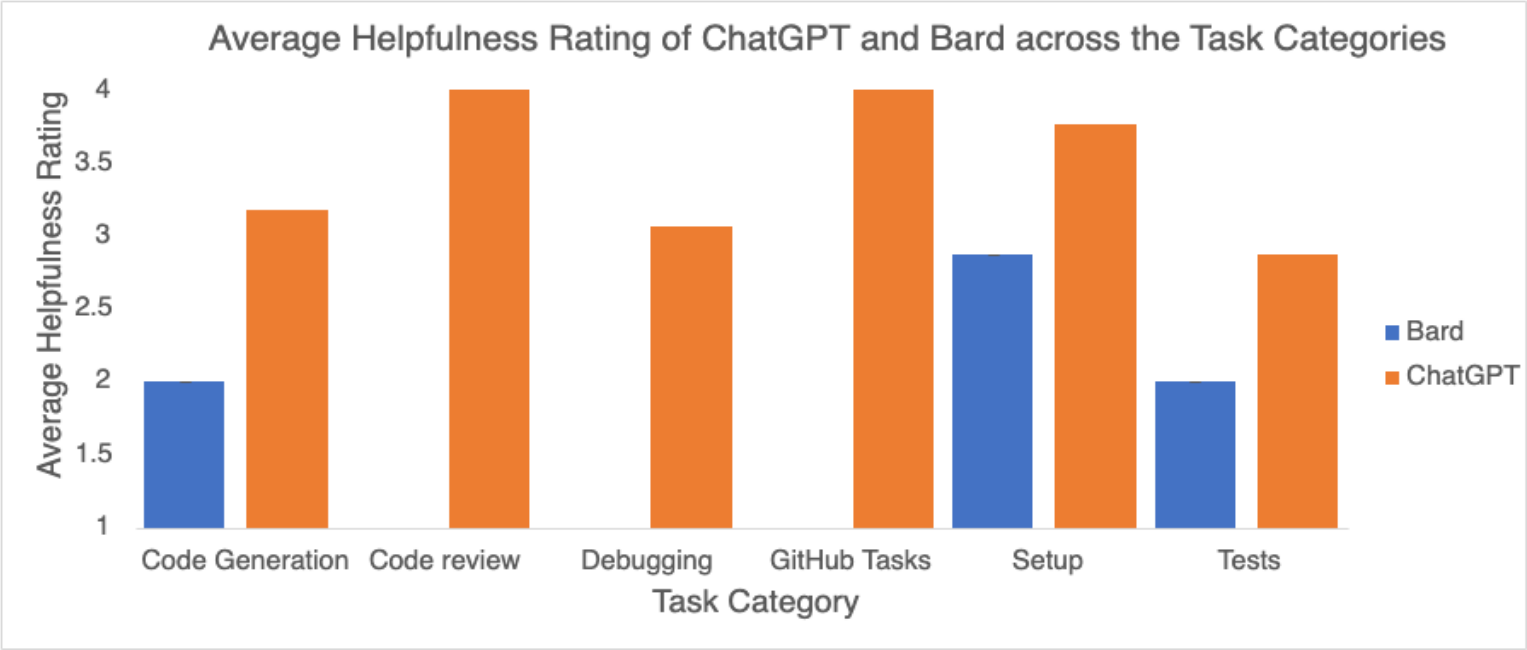

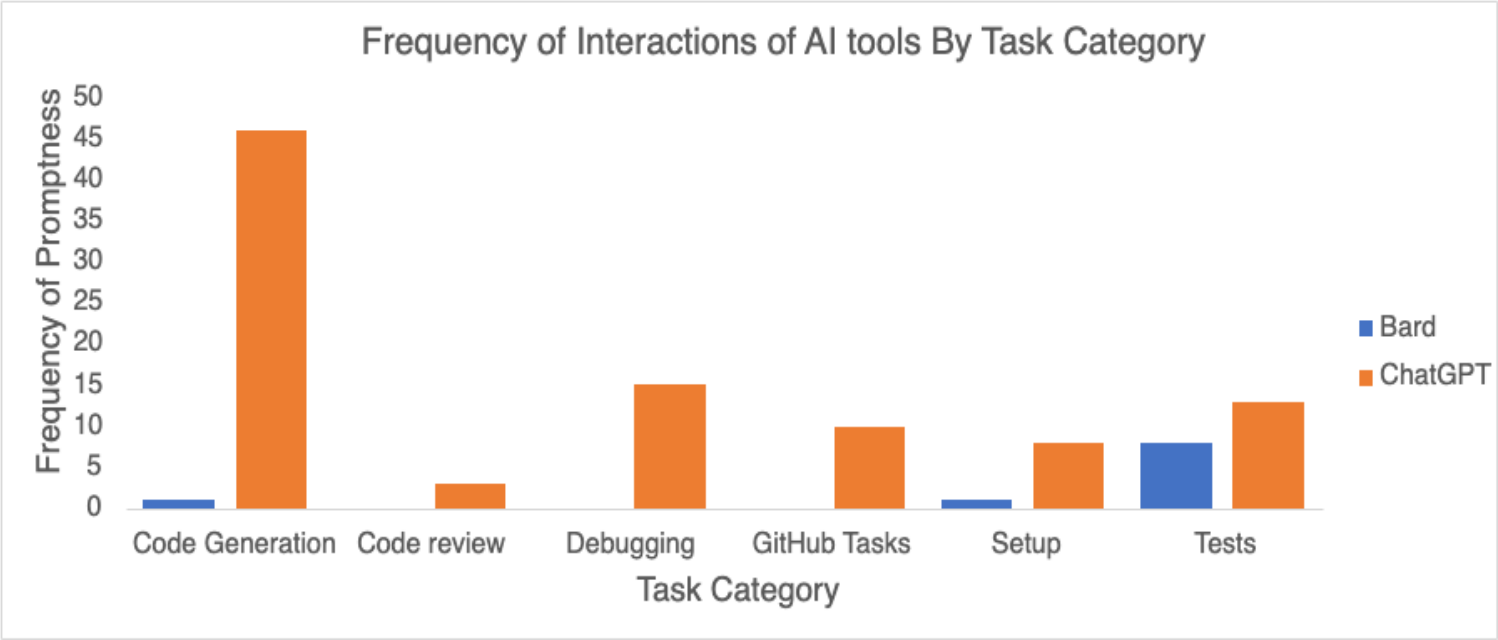

This section presents how helpful the AI tools were in each task category and how frequently all participants interacted with them.

Figure 1 compares the average helpfulness ratings of ChatGPT and Bard across six task categories. ChatGPT received higher ratings than Bard in every category where both tools were used. For example, ChatGPT was very helpful for GitHub tasks and code review, and somewhat helpful for code generation, debugging, environment setup, and testing. Bard, on the other hand, was somewhat unhelpful for code and test generation and somewhat helpful for environment setup. Bard was not used in every task category because ChatGPT gave more efficient and more detailed answers.

Figure 1


Figure 2 compares the frequency of interactions with ChatGPT and Bard across task categories. ChatGPT was used far more frequently than Bard. For instance, ChatGPT was consulted 45 times for code generation, compared with 2 times for Bard. In the debugging category, ChatGPT was consulted 15 times, while Bard was not consulted at all.

Figure 2


The observations in Figure 1 and Figure 2 can be explained as follows. During the initial phase of the research, the participants faced the challenge of setting up the local environment in RubyMine, an integrated development environment (IDE). The two team members using the Windows operating system encountered more difficulties because the website's source code was primarily designed for macOS and Linux systems. ChatGPT proved more helpful than Bard with this challenge: it suggested using the Windows Subsystem for Linux (WSL) to get the project working on a local Windows machine. It also provided detailed explanations and commands to paste into the terminal, so fewer questions were needed to set up the environment. In some cases, ChatGPT's and Bard's answers were similar, but ChatGPT quickly became the preferred choice.

As newcomers to Ruby on Rails, participants relied extensively on AI tools to generate code for various tasks. They interacted with ChatGPT more often for code generation because it provided more logical and accurate responses than Bard, which sometimes struggled to understand questions or requested additional details. Participants had fewer interactions with ChatGPT and Bard about writing tests because testing documentation was available for the project. When participants did consult ChatGPT for writing tests, it proved somewhat helpful. For example, one participant used the AI to write a test for the "Ability to categorize a Contact as a LEAD," and the AI generated the necessary test code along with an explanation; the participant only needed to change variable names to get the code working.
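For readers unfamiliar with Rails testing, the following is a hypothetical sketch of the kind of test described above for the "Ability to categorize a Contact as a LEAD" card. It is not the test the participant obtained from ChatGPT, and the `Contact` stand-in with its `mark_as_lead!` and `lead?` methods is an assumption. It uses plain Minitest, Rails' default testing framework, so that it runs without a Rails application; in a Rails project, such a test would more likely subclass `ActiveSupport::TestCase` and load records from fixtures.

```ruby
require "minitest/autorun"

# Minimal stand-in for the application's Contact model (hypothetical; the
# real Rails model and its attribute names may differ).
class Contact
  attr_reader :status

  def initialize
    @status = "new"
  end

  def mark_as_lead!
    @status = "lead"
  end

  def lead?
    @status == "lead"
  end
end

# The kind of test an AI tool might generate for the LEAD card.
class ContactLeadTest < Minitest::Test
  def setup
    @contact = Contact.new
  end

  def test_new_contact_is_not_a_lead
    refute @contact.lead?
  end

  def test_contact_can_be_marked_as_lead
    @contact.mark_as_lead!
    assert @contact.lead?
    assert_equal "lead", @contact.status
  end
end
```

Running the file with `ruby` executes both tests automatically; adapting generated code like this to a real model mostly means renaming the class, methods, and attributes, consistent with the small edits the participant reported.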

After writing code and tests, the team members had to push their work to GitHub. By this point, none of the team members used Bard, because ChatGPT had already proved more helpful in previous interactions. Only a few simple questions about the Git/GitHub workflow were asked, such as how to create or delete a branch and how to push or undo commits. Once the team had learned these version-control tasks, they no longer needed to ask the AI about Git/GitHub, so interactions in this category declined over time.

After a team member pushed work to GitHub, the other two team members reviewed the code and gave feedback on its quality and logic. ChatGPT's code review responses were rated helpful, but there were few code review interactions, because only one team member used AI when reviewing other team members' code.

Overall, Figures 1 and 2 show that ChatGPT outperformed Bard in both helpfulness ratings and frequency of interactions across task categories. As a result, participants decided to use ChatGPT exclusively for all remaining tasks.

3.1.2 AI Interactions for Individual Participants

We next drill down to analyze how helpful the AI interactions were for each of the three individual participants.

Figure 3


Figure 3 shows the frequency of the three participants' interactions with ChatGPT and Bard over the 7 weeks. The frequency of interactions rose quickly at first, peaked in week 4, and then decreased. This pattern likely reflects that, for most Trello cards, the participants needed more assistance at the start to learn how to contribute effectively to the project. In the final week, the team began implementing new features that were less closely related to prior features, so they used the AI more frequently for these less familiar tasks. Interaction frequency also fluctuated with the difficulty of the tasks: for example, when working on a challenging task such as the "Ability to promote a Prospective Client to become a Client" in week 5, Participant 3 asked ChatGPT for more assistance.

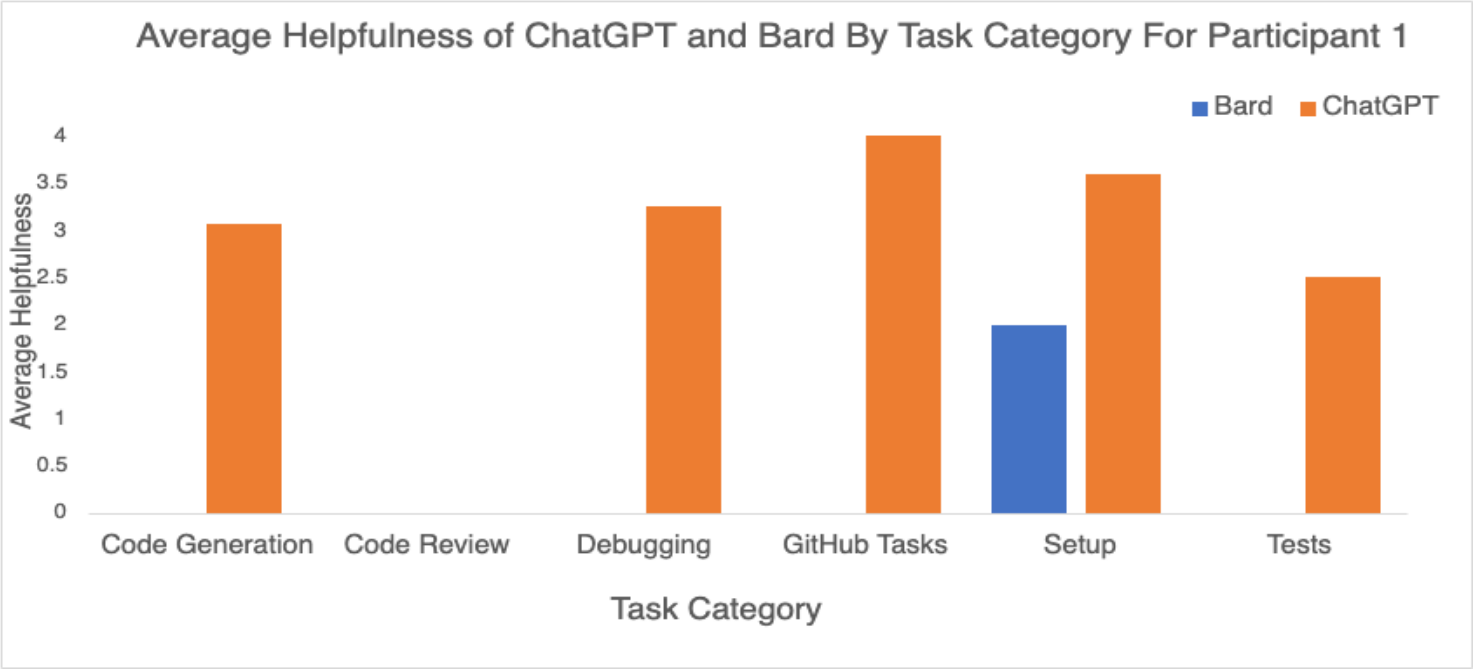

Figure 4


Figure 4 shows Participant 1's average helpfulness ratings for ChatGPT and Bard across the six task categories. Participant 1 used both ChatGPT and Bard to set up the local environment, but after noticing that ChatGPT was more helpful than Bard, they used only ChatGPT for the remaining tasks. Participant 1 also found ChatGPT very helpful for GitHub tasks and somewhat helpful for code generation, debugging, setup, and tests. This participant did not use any AI tool for code review.

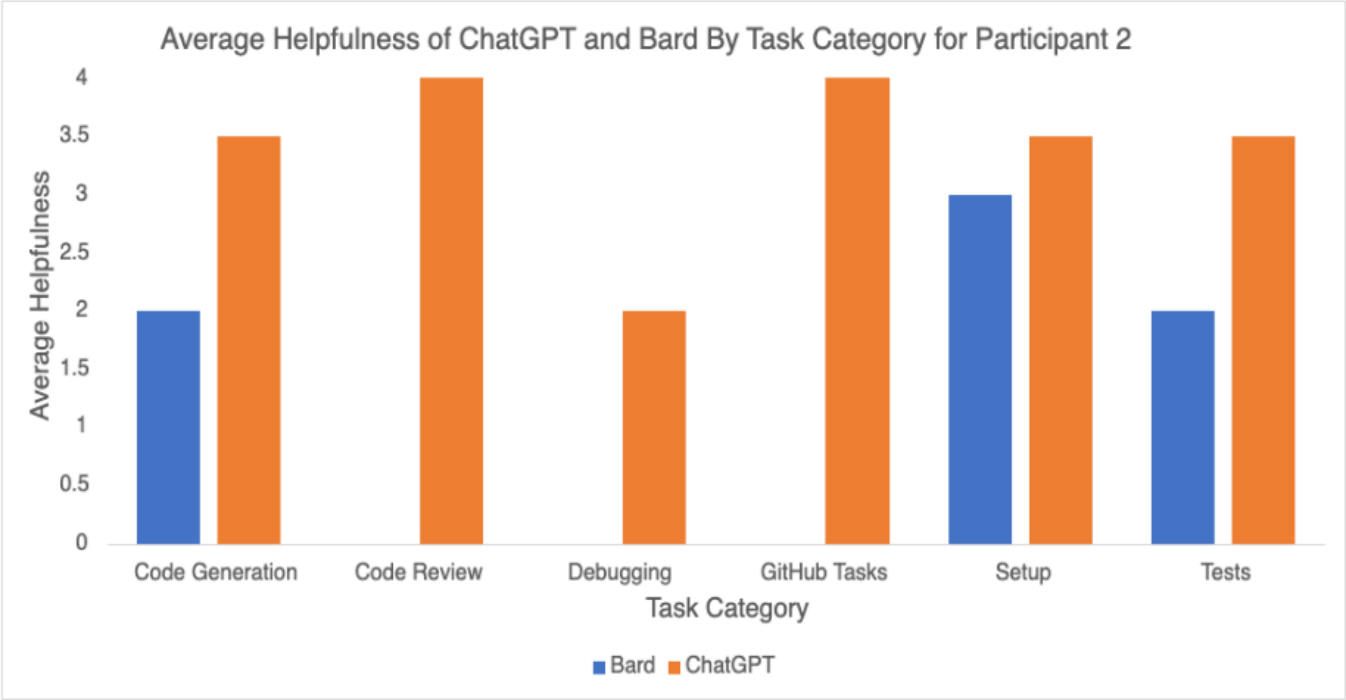

Figure 5


Figure 5 shows Participant 2's average helpfulness ratings for ChatGPT and Bard. Participant 2 used both ChatGPT and Bard to set up the local environment and found ChatGPT slightly more helpful than Bard, so they continued to use both tools for code generation and tests on the first Trello card they worked on. For these categories, however, ChatGPT proved significantly more helpful than Bard, so they stopped using Bard and used only ChatGPT for the remaining Trello cards.

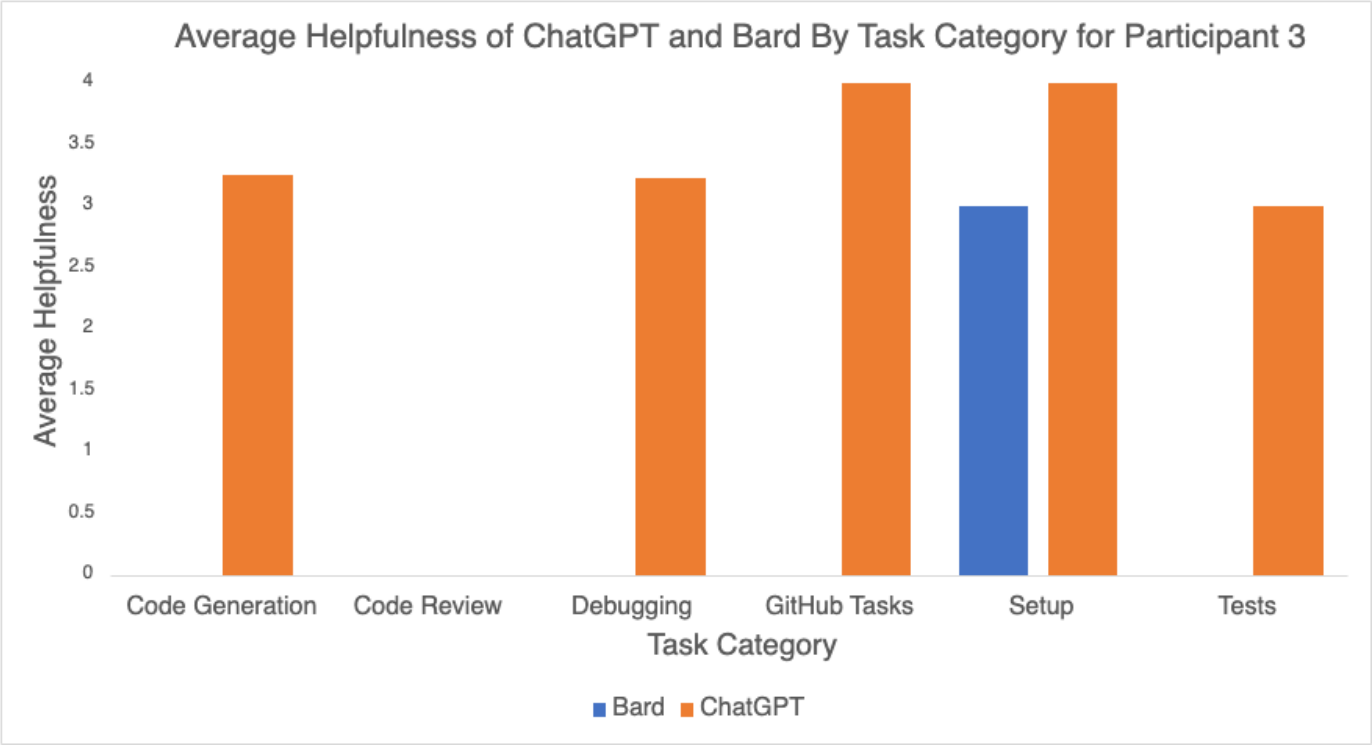

Figure 6


Figure 6 shows Participant 3's ratings. Participant 3 tried both ChatGPT and Bard for setting up their local environment, observed that ChatGPT was more helpful than Bard, and chose to use ChatGPT for the remainder of their tasks. They found ChatGPT very helpful for GitHub tasks and setup but only somewhat helpful for code generation, debugging, and tests. This participant did not use any AI tool for code review.

All participants observed almost immediately that ChatGPT outperformed Bard, so much so that each participant independently decided to use only ChatGPT for the remainder of their work. The same pattern appears in the frequency of interactions in each category for each of the three participants, as shown in Figure 7: Bard was used only for the initial setup, some code generation, and some tests. Interestingly, the AI tools were used most frequently for code generation. Participant 1 interacted with the AI in this category considerably more than the other participants, primarily because Participant 1 spent less time learning the Ruby on Rails framework and relied on ChatGPT to generate code for their assigned features.

Figure 7


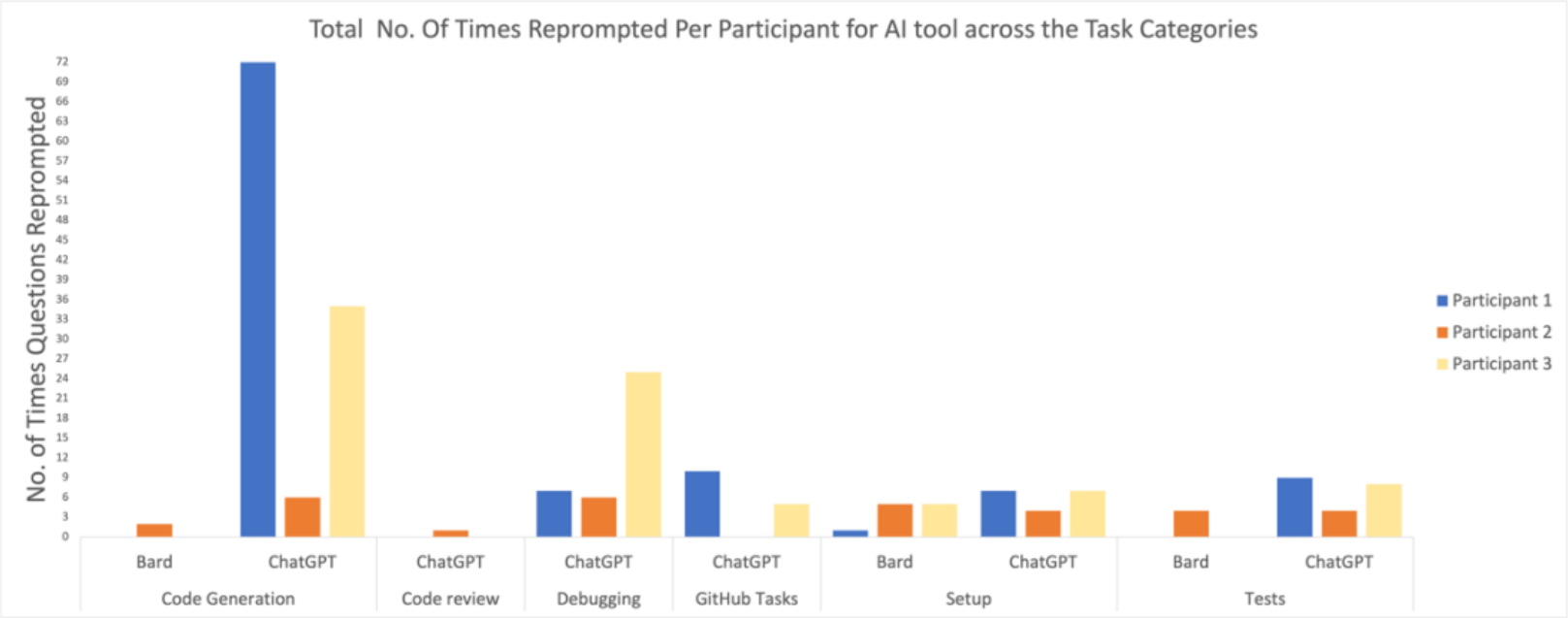

3.1.3 Re-prompting During AI Interactions

We next evaluate how often each participant needed to re-prompt ChatGPT or Bard within each interaction. If the original prompt did not produce a satisfactory answer, the participant might re-prompt the AI tool to provide additional context or to ask the question in a slightly different way. Some interactions involved a long back-and-forth between the participant and the AI, resulting in many re-prompts. Others consisted of a brief question for which the first response was sufficiently helpful.

As shown in Figure 8, the code generation category had the highest frequency of re-prompting for ChatGPT. Several factors may explain this. First, code generation tasks are often complex, requiring a clear understanding of the desired outcomes and of programming concepts. The participants may have struggled to formulate their queries or to provide sufficient context to ChatGPT, and therefore needed to re-prompt more often to obtain accurate code. Second, because the participants were newcomers to Ruby on Rails, their limited familiarity with the framework may have made it harder to communicate effectively with ChatGPT. Lastly, ChatGPT's training data might not have covered all code generation scenarios in Ruby on Rails, which could have affected its performance and contributed to the higher number of re-prompts.

Figure 8


3.2 Qualitative Analysis

We now describe our experience as shared among the three participants. Using ChatGPT in the coding process was often a shortcut, as it provided ready-made solutions without requiring a deep understanding of the underlying concepts. While this convenience helped complete many tasks in a short time, it also raises concerns about developing independent coding proficiency. Relying heavily on ChatGPT to write Ruby on Rails code may have reduced opportunities to hone software development skills and build a solid foundation in the language. Even after coding in Ruby for seven weeks, the student developers still did not feel independent of ChatGPT. However, prompt engineering (knowing how to ask the right question to get a helpful answer) became a valuable skill: the participants learned that a well-constructed prompt significantly reduced the time they needed to spend reading documentation.

Despite these potential drawbacks, ChatGPT had its merits. It significantly improved the software development experience by boosting the developers' confidence and providing immediate assistance when development felt overwhelming. When the developers faced complex tasks or unfamiliar challenges, ChatGPT acted as a reliable guide, offering quick and efficient solutions. Its assistance accelerated the overall workflow, enabling the developers to complete tasks faster than they otherwise could have. Compared with prior projects they had supervised with student teams, the supervisors were impressed by how quickly the team began contributing to an existing software project, despite having no prior familiarity with Ruby on Rails and no advice from senior developers.

The project would likely have gone differently had the developers added functionality to the Vivi Dynamics website without any AI tools. They would probably have been less productive over the seven weeks, since more of their time would have gone to learning Ruby. However, for a longer-term project, it may have been more beneficial to invest time upfront in learning the language so that later tasks could be completed faster.

It is important to strike a balance between utilizing AI tools like ChatGPT and actively engaging in the learning process. While ChatGPT can be valuable, it should not replace the pursuit of a deeper understanding of coding principles and techniques.

3.3 Best Practices

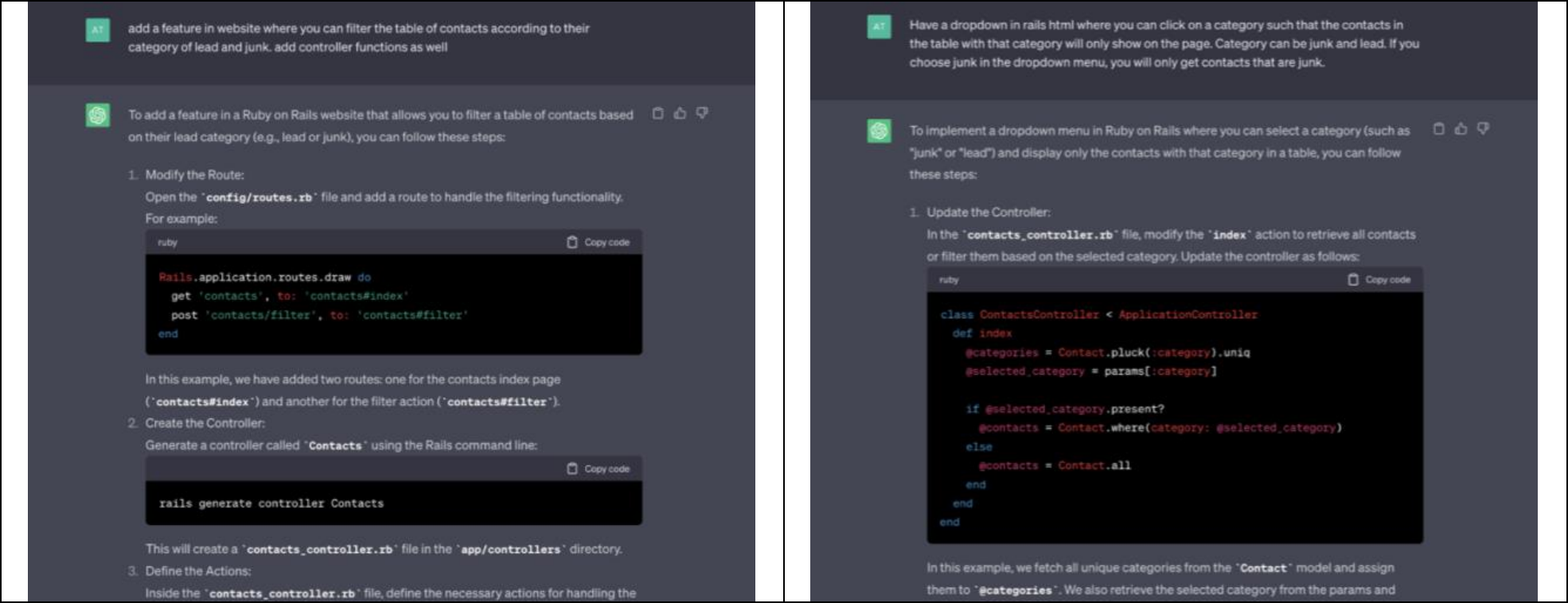

A critical element discovered during the study was the significance of prompt engineering when interacting with ChatGPT. Prompt engineering refers to the strategic construction and refinement of the queries or statements fed into the AI model. The quality and precision of these prompts had a considerable influence on the relevance and applicability of the code or advice generated by ChatGPT.

Through the interactions with ChatGPT, the team observed a clear relationship between the specificity and clarity of the questions asked and the utility of the AI's responses. When given detailed, well-structured questions, the model produced responses that were better aligned with the project's needs. For example, queries that defined the problem context, specified the desired software architecture, and outlined any constraints or requirements yielded more pertinent and effective solutions. Conversely, vague or overly general prompts often led to responses that were too broad or did not integrate seamlessly with the existing codebase. Thus, the more specific the prompts were, the more useful the outputs were for deployment in the final code. Figure 9 illustrates this. In the left image, Participant 1 asks ChatGPT about adding the "Ability to categorize a Contact as JUNK" to a table; ChatGPT provided a detailed response, but the participant found it somewhat unhelpful and rated it a 2. In the right image, by contrast, the participant asked a specific question, even mentioning that it was for "rails html," and ChatGPT's response was rated a 4 (extremely helpful).
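The elements that made prompts effective (problem context, architecture, constraints, and a concrete task) can be captured in a reusable template. The following Ruby sketch is one hypothetical way to do so; the `build_prompt` helper, its section labels, and the example values are illustrative and are not the prompts shown in Figure 9.

```ruby
# Hypothetical helper: assembles a structured prompt from the elements the
# team found most useful. Section labels are illustrative.
def build_prompt(context:, architecture:, task:, constraints: [])
  lines = ["Context: #{context}", "Architecture: #{architecture}"]
  unless constraints.empty?
    lines << "Constraints:"
    constraints.each { |constraint| lines << "- #{constraint}" }
  end
  lines << "Task: #{task}"
  lines.join("\n")
end

# A vague prompt, for contrast.
vague = "How do I mark contacts as junk?"

# The same request with context, architecture, and constraints spelled out.
specific = build_prompt(
  context: "Admin panel of an existing Ruby on Rails application; Contacts are listed in an HTML table.",
  architecture: "Standard Rails MVC (model, controller, and view may all need changes).",
  constraints: ["Keep the existing table layout", "Do not change other Contact attributes"],
  task: "Add a button to each table row that categorizes the Contact as JUNK."
)

puts vague
puts specific
```

A template like this does not guarantee a helpful answer, but it makes it harder to forget the context that, in this study, most often separated low-rated from high-rated responses.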

Drawing on these insights, prompt engineering emerged as a crucial factor in maximizing the utility of AI tools like ChatGPT in software development. The participants' experience underscores the need for developers to invest time and thought in constructing clear, detailed, and context-specific queries. This strategy not only improves the chances of obtaining deployable code but also enhances the overall productivity of the development process. This is especially relevant for junior developers who plan to use AI tools in their work.

Figure 9


4 Threats to Validity

The authors are not aware of a previous case study that measures the effectiveness of AI tools on software development in this data-driven manner. However, this study has a few threats to its validity.

One threat is that the research lacked a control group, which could have served as a benchmark for comparing a team that uses AI tools to develop the Rails application with a team that follows the traditional learning approach of reading documentation and searching Stack Overflow.

Another threat is that the architecture had already been designed by a senior software engineer, so the team could not assess the impact of AI tools on architectural design, a key aspect of software development. In addition, the features were specified by an experienced software engineer. This was beneficial and accelerated development, but it may have limited the exploration of AI tools when working with clients who have little knowledge of software development.

Additionally, Ruby on Rails is a well-established, mature framework that has accumulated extensive documentation and strong community support since its release in 2004. As a result, these findings may not generalize to newer or less-documented frameworks, since the AI tools were trained only on data available up to their training cutoff.

Finally, the researchers acted as both developers and research subjects in the study. This dual role may have introduced bias or subjective interpretations of the AI tools' effectiveness.

5 Conclusion

The advent of AI tools has raised the question of whether senior developers are still needed to mentor junior developers. It is essential, however, to view AI tools as enablers rather than as threats to developer roles.

Based on the results and analysis, AI tools, especially ChatGPT, can be highly beneficial in specific areas such as GitHub tasks and code review. However, for junior developers aiming to become senior developers, mentorship from experienced developers remains indispensable, because AI tools alone cannot impart the technical and soft skills required to excel in the field. While ChatGPT can generate code from prompts, it falls short as a teacher of complex programming languages: it is a helpful tool for code generation but does not enable junior developers to write code independently. The project's participants worked with Ruby on Rails for seven weeks, yet they still lacked confidence in their ability to code without relying on ChatGPT. This suggests that acquiring technical skills still requires mentorship and hands-on experience guided by senior developers. Beyond technical expertise, soft skills such as teamwork, communication, and project management are vital for becoming a well-rounded senior developer. These skills cannot be effectively taught by AI tools; they are best acquired through real-life experience and mentorship from senior developers who have extensive knowledge and practical insight. Therefore, senior developers are still needed to mentor junior developers, and the combination of AI tools and mentorship offers a promising pathway for junior developers to grow and thrive in their careers.

Furthermore, while AI tools, like ChatGPT, can accelerate certain coding tasks and offer suggestions, they still lack the creativity and innovative problem-solving skills inherent in human developers. They are proficient at generating code blocks but cannot architect holistic solutions or think creatively. These tools excel in automating repetitive tasks but rely on human developers to connect the generated code blocks cohesively and strategically, and to make any modifications necessary.

While AI tools can automate certain aspects of coding and significantly increase overall productivity, they cannot replace the creativity, strategic thinking, and human judgment that developers, both junior and senior, bring to the table.

To advance the field, future studies could include a control group that develops the same features without AI tools, use languages and frameworks of varied ages, including newer ones, and work from project requirements provided by a client without development expertise. Such studies would help assess how AI tools adapt to and support the fast pace of software development, and would allow the impact of AI tools on architectural design to be explored. Finally, to ensure unbiased and reliable results, future studies evaluating the effectiveness of AI tools in software development should separate the roles of researchers and research subjects.

6 Appendix